In the previous two articles, we discussed chapter 2 of Designing Data Intensive Applications by Martin Kleppmann which discussed data models and query languages, here and here.

Now we move on to chapter 3 which discusses storage and retrieval. This chapter is pretty long so we will discuss it over a series of articles, this one will discuss the data structures commonly used for storage such as hash indexes, SSTables, LSM-trees, B-trees, etc. In later articles we will discuss more topics related to storage and retrieval, mainly, transactions processing and column-oriented storage.

Why Should I as an Application Developer care about Storage Engines?

While this might look like getting into a low level territory of dealing with database internals, application developers who don't work with database internals directly still need to understand the main differences between data structures used in the storage engines of different databases to be able to not just select the suitable database for their application business usecase, but also the suitable storage engine for their database.

In particular, there is a big difference between storage engines that are optimized for transactional workloads and those that are optimized for analytics. Also some storage engines are simple and fast but only work with a small dataset while other storage engines are way more complex but can handle loads of data efficiently.

A good example for how different storage engines can be within the same database is MySQL which has a variety of storage engines we discuss here before. MySQL has very simple storage engines like a csv engine and very complex ones like InnoDB. It also supports a variety of index types which have different speeds and limitations, we discussed this here as well.

Data Structures That Power Your Database

In this section will discuss some examples of data structures commonly used in storage engines, but let's first take a quick look at the simplest database you can build, you can actually build this using a file and 2 bash functions as follows:

#!/bin/bash

db_set () {

echo "$1,$2" >> database

}

db_get () {

grep "^$1," database | sed -e "s/^$1,//" | tail -n 1

}

These 2 functions use a file called database to store a set of comma separated key-value pairs, much like a csv file but without worrying about character escaping issues and so on.

The db_set function captures 2 arguments, key and value, and concatenates the 2 arguments with a comma in between and then appends them to the database file.

The db_get function uses the grep command to scan the database file for any row that starts with the row key (much like a primary key lookup), it then strips the value from the row and returns the last occurrence in case multiple rows matched the given key.

And it works:

$ db_set 123456 '{"name":"London","attractions":["Big Ben","London Eye"]}'

$ db_set 42 '{"name":"San Francisco","attractions":["Golden Gate Bridge"]}'

$ db_get 42

{"name":"San Francisco","attractions":["Golden Gate Bridge"]}

This style in database storage engines is known as Log Structured data files. Log data files are append only files where new entries are only appended to the end of the file.

There are three important notes to take away from this short example:

- The

db_setfunction is fast as it gets, it basically appends a new value to the end of the database file with any extra logic. - Because of the simplicity of the writing logic, we only append to the end of a file, we have to model updates as new writes, so for example if the value of some key needs to be updated, this will translate into a new entry with the same key and the new updated value. That's why the

db_getmethod usestail -n 1to fetch the last matched occurrence of the key. This also introduces another complexity point of how to make sure this database file doesn't grow needlessly with frequent updates, so some compaction logic will have to be implemented in order to reclaim this space. Same will happen with deletes (assuming we use a deleted flag to mark deleted rows). - The

db_getfunction is very bad, it scans the entire database file with every read. In algorithmic terms, it has a complexity of \(O(n)\) which means that if the database file grew to double the size, it will take twice as long to serve a read which is a very unacceptable characteristic of a database.

In order to efficiently find the value for a particular key in the database, we need a different data structure: an index. In this article we will look at a range of indexing structures and see how they compare; the general idea behind them is to keep some additional metadata on the side, which acts as a signpost and helps you to locate the data you want. If you want to search the same data in several different ways, you may need several different indexes on different parts of the data.

Additional structures such as indexes slow down writes because those indexes will have to be updated accordingly, but on the other hand, they speed up reads so this trade off is important to know.

Now let's take a look at some famous data structures used to build indexes in storage engines.

Hash Indexes

Hash indexes are basically key-value hash maps. A hash map is a data structure which encodes (hashes) a key and maps it to its corresponding value so that when the data for this key is queried, that key is hashed using the same function and the value is retrieved in \(O(1)\) in the simplest case (without worrying about collisions).

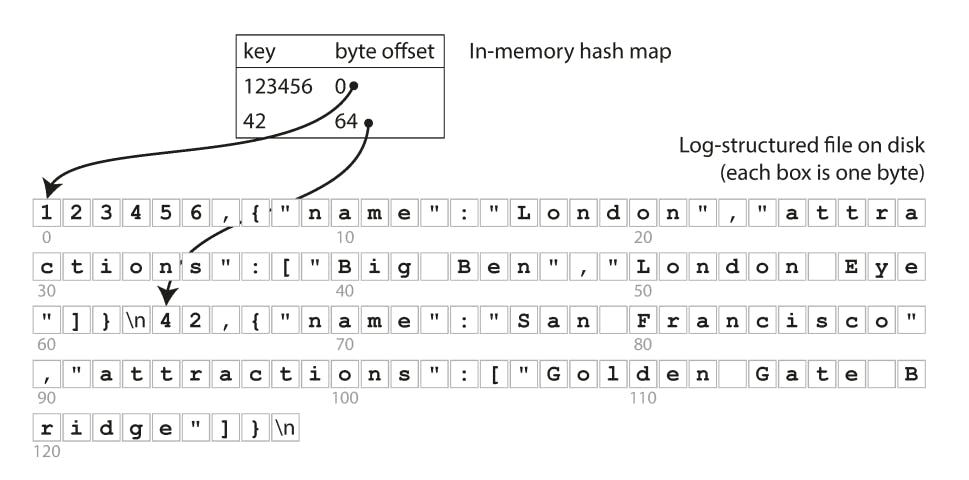

Let’s say our data storage is just appending to a file, as in the preceding example. Then the simplest possible indexing strategy is this: keep an in-memory hash map where every key is mapped to a byte offset in the data file—the location at which the value can be found, as illustrated in the following diagram:

Whenever you append a new key-value pair to the file, you also update the hash map to reflect the offset of the data you just wrote (this works both for inserting new keys and for updating existing keys). When you want to look up a value, use the hash map to find the offset in the data file, seek to that location and read the value.

While this seems very simplistic, it works and it's used in many data stores, not just databases. I discussed a storage system at facebook called Haystack which uses a very similar idea, if you're interested, check it here.

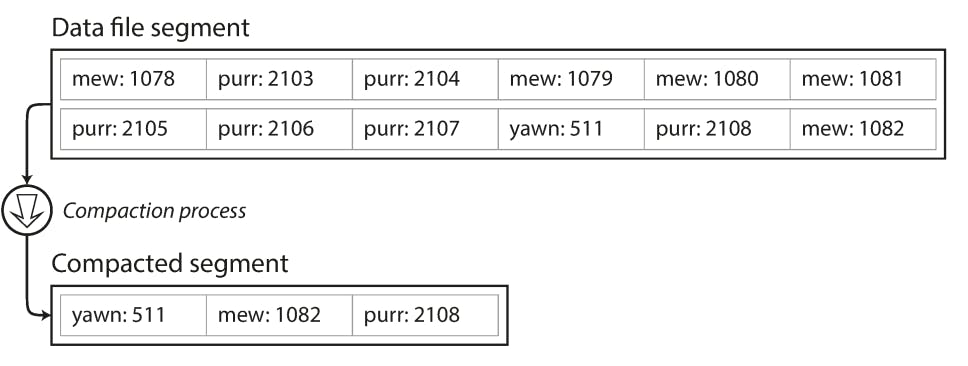

There are many optimizations to reclaim needlessly occupied space by log files, for example, we can use multiple log files, so when one log file goes out of capacity, it gets frozen and new writes are done in a new log file. Frozen log files can later be compacted (duplicate keys are removed and only the most recent value of a key is kept). The following diagram shows an example for this:

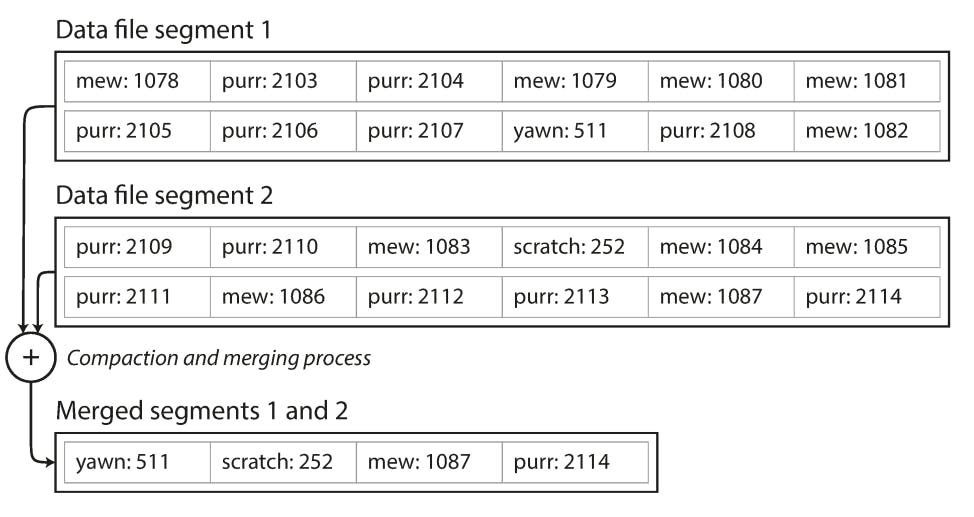

This can be further optimized by merging compacted segments together because a compacted segment might end up being very small than the segment capacity. The the following diagram shows an example of how this can be done:

Each segment now has its own in-memory hash table, mapping keys to file offsets. In order to find the value for a key, we first check the most recent segment’s hash map; if the key is not present we check the second-most-recent segment, and so on. The merging process keeps the number of segments small, so lookups don’t need to check many hash maps.

There are a lot of other problems that have to be handled in order to have an efficient hash index, for example, file format (csv is not the best and binary logs are better), crash recovery (if the in-memory hashmap is lost it will take a lot of time for it be repopulated so snapshots of the index are saved to disk to speed up crash recovery), etc.

However, the hash table index also has limitations:

- The hash table must fit in memory, so if you have a very large number of keys, you’re out of luck. In principle, you could maintain a hash map on disk, but unfortunately it is difficult to make an on-disk hash map perform well. It requires a lot of random access I/O, it is expensive to grow when it becomes full and hash collisions require fiddly logic.

- Range queries are not efficient. For example, you cannot easily scan over all keys between kitty00000 and kitty99999 —you’d have to look up each key individually in the hash maps.

SSTables and LSM-Trees

As we saw in hash tables, new logs are added to the end of the table, so logs at the end of the table take precedence over logs that came earlier in the table and this is how hash tables detect updates. Other than that, the order of keys in the table is irrelevant. As we explained in the last part of the previous section, hash tables have two major limitations, namely, having to entirely fit in memory and not being able to serve range queries efficiently. Those two limitations, in my opinion, render it completely ineffective in any general purpose database system.

Now let's consider this, what if we can store all segments on disk and only store a few keys in memory and build a mechanism that would allow us to use the few keys stored in memory to know which segment(s) to load from disk in search of some key? That would eliminate the first limitation of having to fit all segments in memory.

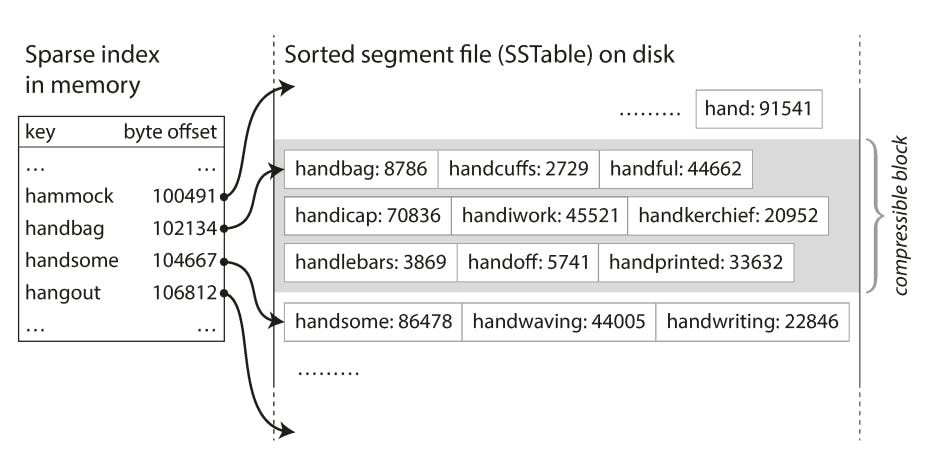

This is exactly what SSTables do, they store all segments on disk and only keep a few keys in memory, but with a minor twist, those keys kept in memory will have to be sorted. The idea is that if you have sorted keys in memory and you're looking for a specific key, you will just have to figure out the last key that's smaller than the lookup key and the first key that's bigger than the lookup key, then you can scan all segment(s) that fall in between those 2 keys from disk. This is how SSTables got their name, it stands for sorted sparse tables, because the keys are sorted and they are sparse in the sense that we store a key for every group of segments.

Let's take an example to understand this better:

In the previous diagram, let's say we are looking for the key handiwork, because the keys in the SSTable are sorted, we know it must fall between handbag and handsome, so we can start from the offset of handbag and read all segments until we reach handsome to locate the needed key or determine if it doesn't exist at all. It's clear that the less sparse the table is, the faster we will be able to look up keys but the larger this table will get and becomes harder to fit in memory.

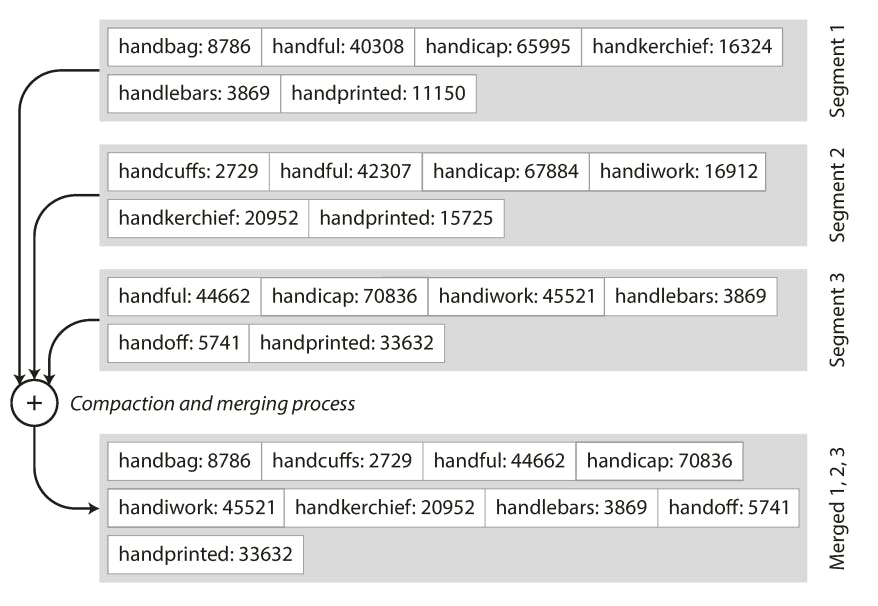

This new structure also helps us to solve the second limitation of not being able to perform range queries efficiently. Since we sort keys now, we can make segments also sorted by key during compaction. Notice that during compaction, we can remove duplicate keys (perform updates) while writing the new compacted segment. This can be easily done while segments are resident on disk using an algorithm that's very similar to a normal merge sort as follows:

We start from the beginning of each sorted segment and read the first key from each of them, then choose the lowest key based on the sort order and write that key in the new compacted segment file. This is pretty much what happens in the previous diagram.

Now since compacted segments are also sorted by key, we can easily serve range queries. Using the same example we gave for looking up the value of the key, we can serve a query that says, fetch all records that are greater than handbag and less than handsome because we know for sure that all segments between those 2 keys will have to fall between this range and they are internally sorted.

Constructing and maintaining SSTables

So far so good, but we didn't answer a very important question. Our main assumption in log structured storage engines is that we just append a new log to the file (at the end) and this, naturally, produces an unsorted segment of keys. But the main assumption in SSTables is that keys are sorted which suggests that now when we add new keys we don't just append to the end of the file, rather, we will have to find the correct location for the new key in that the log file which is no longer just appending new keys to the end of the file.

In order to be able to do this efficiently, a normal log structure won't work and we will have to use a data structure that allows to insert keys at random order then be able to read them back in sorted order. Luckily such data structures exist, for example, AVL trees or red-black trees.

We can now make our storage engine work as follows:

- When a write comes in, add it to an in-memory balanced tree data structure (for example, a red-black tree). This in-memory tree is sometimes called a memtable.

- When the memtable gets bigger than some threshold—typically a few megabytes —write it out to disk as an SSTable file. This can be done efficiently because the tree already maintains the key-value pairs sorted by key. The new SSTable file becomes the most recent segment of the database. While the SSTable is being written out to disk, writes can continue to a new memtable instance.

- In order to serve a read request, first try to find the key in the memtable, then in the most recent on-disk segment, then in the next-older segment, etc.

- From time to time, run a merging and compaction process in the background to combine segment files and to discard overwritten or deleted values.

This scheme looks good so far, the only limitation that's clear now, is what if the database crashes? The recent writes that are still in the memtable but not yet persisted on disk will be lost. To solve this, most databases maintain what's called a write-ahead-log (WAL) which is a separate file (on disk) to which new writes are written (in the order they come, just appends). Now if the database crashes, the WAL file can be read and the recent writes that were in the memtable but not on disk can be recovered. In order to keep this WAL file at a constant size, when each memtable instance is flushed the disk, the corresponding WAL file is deleted because it's no longer needed.

Performance optimizations

It's worth mentioning that storage engines that are built on maintaining and compacting SSTables are called LSM-trees (log structured merge-trees).

There are a couple of performance optimizations that can be applied to LSM trees, mainly:

- The LSM-tree algorithm can be slow when looking up keys that do not exist in the database: you have to check the memtable, then the segments all the way back to the oldest (possibly having to read from disk for each one) before you can be sure that the key does not exist. In order to optimize this kind of access, storage engines often use additional Bloom filters (A Bloom filter is a memory-efficient data structure for approximating the contents of a set. It can tell you if a key does not appear in the database and thus saves many unnecessary disk reads for nonexistent keys).

- There are also different strategies to determine the order and timing of how SSTables are compacted and merged. One strategy is called size-tiered compaction in which newer and smaller SSTables are successively merged into older and larger SSTables. Another strategy is called leveled compaction in which the key range is split up into smaller SSTables and older data is moved into separate “levels,” which allows the compaction to proceed more incrementally and use less disk space.

Even though there are many subtleties, the basic idea of LSM-trees—keeping a cascade of SSTables that are merged in the background—is simple and effective. Even when the dataset is much bigger than the available memory it continues to work well. Since data is stored in sorted order, you can efficiently perform range queries (scanning all keys above some minimum and up to some maximum) and because the disk writes are sequential the LSM-tree can support remarkably high write throughput.

B-Trees

B-trees are the most commonly used indexing structure. You can find it used in most relational and even some non-relational databases. Make sure to check my article on indexing in MySQL which talks about B-tree indexes in MySQL, how they are constructed and how to leverage them to optimize your queries.

Like SSTables, B-trees keep key-value pairs sorted by key, which allows efficient key- value lookups and range queries. But that’s where the similarity ends: B-trees have a very different design philosophy.

The log-structured indexes we saw earlier break the database down into variable-size segments, typically several megabytes or more in size, and always write a segment sequentially. By contrast, B-trees break the database down into fixed-size blocks or pages, traditionally 4 KB in size (sometimes bigger) and read or write one page at a time. This design corresponds more closely to the underlying hardware, as disks are also arranged in fixed-size blocks.

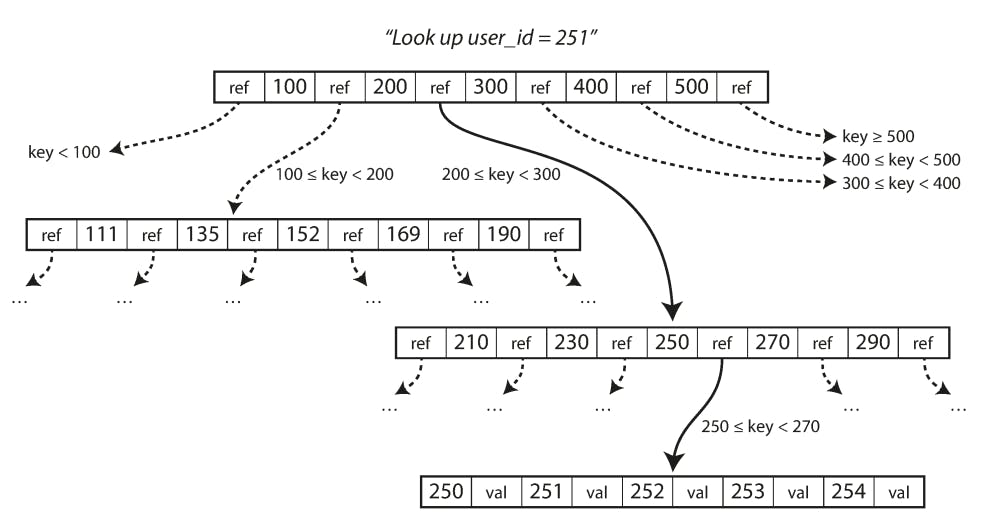

In B-trees, one page is designated as the root of the B-tree; whenever you want to look up a key in the index, you start here. The page contains several keys and references to child pages. Each child is responsible for a continuous range of keys and the keys between the references indicate where the boundaries between those ranges lie.

So for example, in the previous diagram, let's say we are looking for the user with id = 251, we start the root of the b-tree and we follow the ref contain the range [200-300] because 251 falls between this range. The in the child page, we follow the range [250, 270] because 251 falls between this smaller range as well, and so on, we keep traversing the tree down to a leaf node containing user_id = 251. It's worth mentioning that the leaf node will contain the value being indexed. In some storage engines like InnoDB which is very common in MySQL, the leaf node will also contain the primary key in case queries needed more data that were not in the index (i.e. the index is not a covering index). Some other storage engines like MyISAM which is also somewhat popular in MySQL, the leaf node will contain the physical address of this record.

The number of references to child pages in one page of the B-tree is called the branching factor. In practice, the branching factor depends on the amount of space required to store the page references and the range boundaries, but typically it is several hundred.

If you want to update the value for an existing key in a B-tree, you search for the leaf page containing that key, change the value in that page and write the page back to disk (any references to that page remain valid). If you want to add a new key, you need to find the page whose range encompasses the new key and add it to that page. If there isn’t enough free space in the page to accommodate the new key, it is split into two half-full pages, and the parent page is updated to account for the new subdivision of key ranges.

This algorithm ensures that the tree remains balanced: a B-tree with n keys always has a depth of \(O(log n)\). Most databases can fit into a B-tree that is three or four levels deep, so you don’t need to follow many page references to find the page you are looking for. (A four-level tree of 4 KB pages with a branching factor of 500 can store up to 256 TB.)

Making B-trees reliable

Some operations done in a B-tree, like overwriting a page or overwriting several pages during a page split, are dangerous because the database could crash during those operations which might lead to corrupt pages or even orphan pages (pages with no parent).

In order to account for this, B-trees employ a similar mechanism to what we discussed earlier, it uses a WAL file, which is as we mentioned before, an append-only file to which every B-tree modification must be written before it can be applied to the pages of the tree itself. When the database comes back up after a crash, this log is used to restore the B-tree back to a consistent state. In B-trees, this is also called redo logs or binary logs which are in a database like MySQL are the basis for persistence, i.e. a modification is persisted only if its redo log is written to disk, ignoring any further actual page modifications that might happen. This is a very interesting idea and leveraged in building replicated MySQL systems because it allows you replicate only the redo-logs not the actual data pages. Be sure to check my article series on Aurora (AWS's managed MySQL which is entirely built on this idea).

An additional complication of updating pages in place is that careful concurrency control is required if multiple threads are going to access the B-tree at the same time, otherwise a thread may see the tree in an inconsistent state. This is typically done by protecting the tree’s data structures with latches (lightweight locks).

B-tree optimizations

As B-trees have been around for so long, it’s not surprising that many optimizations have been developed over the years. To mention just a few:

- Instead of overwriting pages and maintaining a WAL for crash recovery, some databases (like LMDB) use a copy-on-write scheme. A modified page is written to a different location, and a new version of the parent pages in the tree is created, pointing at the new location. This approach is also useful for concurrency control.

- We can save space in pages by not storing the entire key, but abbreviating it. Especially in pages on the interior of the tree, keys only need to provide enough information to act as boundaries between key ranges. Packing more keys into a page allows the tree to have a higher branching factor and thus fewer levels which makes querying faster.

- Additional pointers have been added to the tree. For example, each leaf page may have references to its sibling pages to the left and right, which allows scanning keys in order without jumping back to parent pages. These are called B+-trees.

Summary

As application developers, it's beneficial to understand the internals of storage engines used to be able to know which ones are a correct fit for our use cases. There many different storage engines using different data structures. We discussed a view of these data structures, namely, hash tables, SSTables, LSM trees and B-trees. There are many other data structures used for indexing, for example, full-text indexes and fuzzy indexes.

In the next article, we will cover a new part of this chapter which will will discuss Transaction Processing and Column-Oriented Storage.